CSCI 6962/4140 Machine Learning and Optimization, Fall 2024

Overview

Machine learning and data science typically proceed by formulating the desired outcome as the solution to an optimization problem, then using suitable algorithms to solve these problems efficiently. Neural networks (aka deep learning) have become the dominant paradigm of modern machine learning, and their impressive successes are due to four factors:

- the availability of massive training data sets,

- access to teraFLOPs of compute power,

- powerful general purpose stochastic optimization algorithms, and

- well-designed neural architectures.

As a second course in machine learning, this course focuses on the optimization algorithms used in machine learning and the neural architectures used in modern deep learning.

The first portion of the course introduces the optimization background necessary to understand the optimization algorithms that dominate applications of ML and large-scale optimization, and surveys several popular randomized and deterministic optimization algorithms, placing the emphasis on those widely used in ML applications.

The second portion of the course introduces architectures widely used in modern machine learning because of the proven effectiveness of their inductive biases, and presents common regularization techniques used to mitigate the issues that arise in solving the nonlinear optimization problems ubiquitous within modern machine learning.

The homeworks involve hands-on applications and empirical characterizations of the behavior of these algorithms and model architectures. A project gives the students experience in critically reading the research literature and crafting articulate technical presentations.

Course Logistics

The syllabus is available as an archival pdf, and is more authoritative than this website.

Instructor: Alex Gittens (gittea at rpi dot edu)

Lectures: MTh 2-4pm ET in Lally 104

Questions and Discussions: Piazza

Office Hours: M 4-5pm ET and Th 10-11am ET in Lally 316

TA: Vijay Sadashivaiah (sadasv2 at rpi dot edu)

TA Office Hours: Tuesday 3-4pm WebEx

Course Text: None

Grading Criteria:

| CSCI 4140 | CSCI 6962 |

|---|---|

|

|

Letter grades will be computed from the semester average. Lower-bound cutoffs for A, B, C and D grades are 90%, 80%, 70%, and 60%, respectively. These bounds may be moved lower at the instructor's discretion.

Lecture Schedule

- Lecture 1, August 29/2024. Course logistics; introduction to optimization; ordinary least squares, gradients and their computation; why gradient descent is not always the best optimization algorithm. Lecture notes. Code comparing gradient descent and conjugate gradient descent for solving linear systems.

- Lecture 2, September 3/2024. Challenges of optimization; machine learning as finding predictable patterns from a set of data; stages of machine learning; support vector machines for binary classification; weight decay and regularization; empirical risk minimization. Lecture notes.

- Lecture 3, September 5/2024. Review of probability essentials: joint distributions, marginals, conditional distributions, expectation, conditional expectation, variance, independence, conditional independence; parameterized machine learning models. Lecture notes.

- Lecture 4, September 9/2024. Gaussian, bernoulli, and categorical noise models, respectively, for regression, binary classification, and multiclass classification. Maximum likelihood estimation for fitting parameterized ML models with these noise models; specific examples of ordinary least squares and logistic regression.Lecture notes.

- Lecture 5, September 12/2024. MLE for fitting a multiclass classification model. KL-divergence and the cross-entropy loss. Training, testing, and validation splits, and the general workflow for supervised ML. Linear algebra quick review: linear independence, spans, bases, inner products, Cauchy-Schwarz inequality, high-dimensional angles, law of cosines, Pythagorean theorem, outer products, symmetric matrices, eigenpairs, eigenvalue decomposition, positive semidefiniteness. Lecture notes.

- Lecture 6, September 16/2024. Taylor Series review; gradients and Hessians; oracle models for optimization: zero, first, and second-order; convexity of sets and functions; convex optimization problems; examples of convex sets. Lecture notes.

- Lecture 7, September 19/2024. Sketch of the proof that local minimizers of convex optimization problems are global minimizers; strict convexity and strong convexity; first and second-order characterization of convex functions, with worked examples; operations that preserve convexity of functions; examples of convex optimization problems. Lecture notes.

- Lecture 8, September 23/2024. optimality conditions for smooth unconstrained and constrained convex optimization problems (Fermat's condition). Example of minimizing l2-regularized logsumexp using the optimality condition. Example of the optimality condition for projecting onto a convex set. The projection onto the unit Euclidean ball. Lecture notes.

- Lecture 9, September 26/2024. Projection operator is contractive; subdifferentials and subgradients, with examples; the general Fermat's condition for optimality of nonsmooth convex optimization problems; extended value convex functions; proper convex functions; convex indicator functions; normal cone to a convex set at a point, and subdifferentials of convex indicator functions; subdifferential calculus rules. Lecture notes.

- Lecture 10, September 30/2024. Example computing the subdifferential of l1-regularized hinge-loss SVM; example using Fermat's condition to find the projection onto the unit l∞-ball; gradient descent; notions of convergence: ε-suboptimality, ε-stationarity, parameter convergence; β-smoothness of functions; descent lemma, step-size choice, and convergence guarantees for gradient descent; iteration complexity and convergence rate; projected gradient descent; subgradient descent. Lecture notes. Optional supplement: Chapter 3 of Bubeck, Convex Optimization.

- Lecture 11, October 3/2024. Kernel learning: Fermat's condition implies the optimal parameters lie in the subspace spanned by the data, kernels associated with feature maps, posing the optimization in terms of the kernel matrix, predicting in terms of the kernel function and the training data. Strong convexity and gradient smoothness characterized using the Hessian; the Polyak-Lojasiewicz (PL) inequality for strongly convex functions; the convex condition number; linear convergence of GD for functions with finite convex condition number; convergence rates for different classes of convex functions. Lecture notes.

- Lecture 12, October 7/2024. Newton's method, guarded and unguarded; minibatch stochastic gradient descent (sgd) and randomized reshuffled minibatch stochastic gradient descent; convergence of iterates for sgd on strongly convex objectives; convergence rate of sgd to approximate stationary on nonconvex objectives, and stepsize scheduling choices for sgd. Lecture notes.

- Lecture 13, October 10/2024. Tips for tuning stepsizes for SGD. Adaptive Gradient descent; principles: momentum, exponential averaging, preconditioning; Popular algorithms: SGD w/ momentum, Nesterov's accelerated gradient descent, AdaGrad, RMSProp, ADAM. Lecture notes.

- Lecture 14, October 17/2024. Motivations for using neural network models. Neural networks as directed acyclic computational graphs. Fully-connected feed-forward neural networks (FCFNNs), perceptron-type neurons, and multilayer perceptron neural networks (MLPs). Activation functions and common choices for them. Linear, logistic, and multiclass logistic regression as MLPs. XOR as an example of a problem that is not linearly separable, and example of using a three-neuron MLP to represent XOR. Universal approximation theorem for non-polynomial activation functions. Vector notation for computing the outputs of MLPs. Lecture notes. Two-layer MLP for classifying FashionMNIST images, in PyTorch. Supplement: A guide to convolution arithmetic for deep learning.

- Lecture 15, October 21/2024. Autoencoders as nonlinear generalization of PCA, as unsupervised approaches to learning representations useful for downstream learning tasks. Backpropagation (BP) as a systematic application of the chain rule to computational graphs. The multivariate chain rule and Jacobians. The BP algorithm for MLP networks. Lecture notes. An autoencoder for learning useful representations for classifying MNIST images.

- Lecture 16, October 24/2024. Convolutional neural networks (CNNs): convolutional filters, inductive biases, convolutional layers, multichannel convolutional layers, fully connected layers, pooling, LeNet5. Lecture notes. LeNet5 implementation in Pytorch for classifying FashionMNIST images.

- Lecture 17, October 28/2024. Transposed convolutions and the U-Net architecture for a fully convolutional approach to pixel-level tasks. Efficiently computing convolutions using matrix multiplication, and backpropagation in CNNs. The phenomenon of vanishing and exploding gradients in deep learning, its causes, and hints of mitigation strategies. Inception blocks for incorporating multiscale information in CNNs, using 1-by-1 convolutions for dimensionality reduction, and using auxiliary losses to mitigate the vanishing and exploding gradients issue. Lecture notes. Going deeper with convolutions, the paper that introduced the Inception v1 architecture. U-Net: Convolutional Networks for Biomedical Image Segmentation, the paper that introduced the U-Net architecture. Anatomy of a high performance convolution, a well-written blog post looking at the methodology and efficiency of computing convolutions.

- Lecture 18, October 31/2024. Batch and Layer Normalization for smoother propagation of gradient information; modified batch normalization for CNNs. Skip connections and ResNets for the same. Lecture notes. PyTorch demonstration of the impact of post- and pre-activation batch normalization. References: Batch Normalization: Accelerating Deep Network Training by Reducing Internal Covariate Shift, the paper that introduced batch normalization; Layer Normalization, the paper that introduced layer normalization; Deep Residual Learning for Image Recognition, the paper that introduced residual networks/connections.

- Lecture 19, November 4/2024. Dropout as a form of regularization that helps prevent overfitting by avoiding co-adaptation of features; interpretation as a computationally efficient approximation to model ensembling. Recurrent Neural Networks (RNNs) for modeling dynamical systems; design patterns for RNNs; example of RNN-based architecture for part-of-speech tagging; vanilla (Elman) RNNs; losses for RNN training; Backpropagation through Time (BPTT) and truncated BPTT. Lecture notes. Pytorch demonstration of the impact of Dropout. References: Dropout: A Simple Way to Prevent Neural Networks from Overfitting, the paper that introduced Dropout. Recurrent Neural Networks and Long Short-Term Memory Networks: Tutorial and Survey, a nice book chapter surveying RNNs.

- Lecture 20, November 7/2024. Tools for converting NLP problems into ML problems: embedding layers and tokenization. Long-short-term memory RNN architectures. Bidirectional and deep RNNs. Lecture notes. Pytorch demonstration of the use of an RNN for news classification.

- Lecture 21, November 11/2024. Sequence-to-sequence modeling using encoder-decoder RNN architectures: teacher forcing, beam decoding; perplexity; seq2seq modeling using RNNs with attention. Lecture notes. For more details, see the original papers Sequence to Sequence Learning With Neural Networks and Neural Machine Translation by Jointly Learning to Align and Translate.

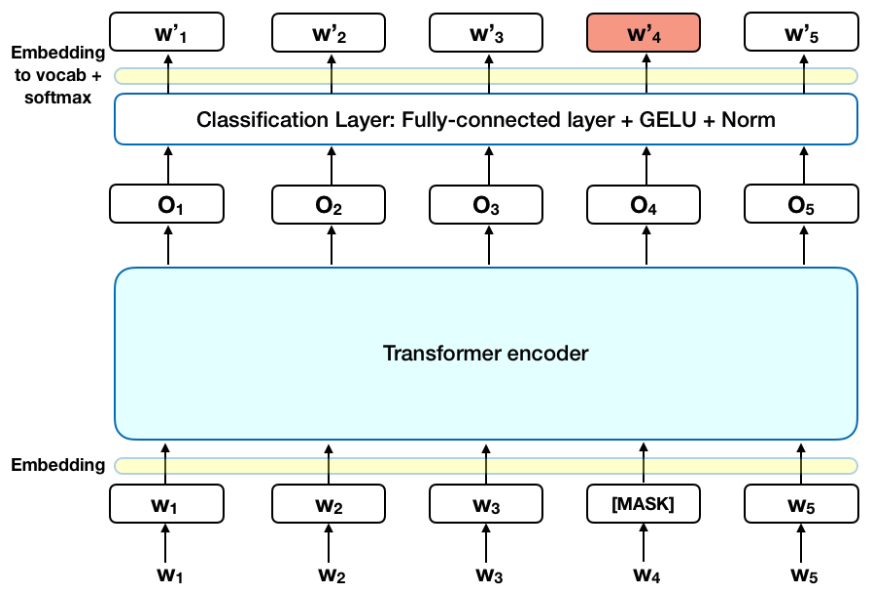

- Lecture 22, November 14/2024. Self-attention as a replacement for many-to-many RNNs, to get contextual representations. Permutation invariance of self-attention, and positional encodings. Multi-headed self-attention, to extract and combine multiple relevant forms of contextual representations. Analogies between transformer layers and MLP and CNN layers. The encoder transformer. The BERT (Bidirectional Encoder Representations for Transformers) language model. Unsupervised pretraining and supervised fine-tuning. Lecture notes. The original papers: Attention is all you need, the paper that introduced Transformers as seq2seq models, and BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding, the paper that used the encoder transformer block for language modeling.

- Lecture 23, November 18/2024. Sequence-to-sequence modeling using Encoder-Decoder Transformer architecture. Cross-attention to find contextual representations of one sequence given the other sequence. Masked self-attention to get contextual representations based only on portions of the input sequence; application to causal self-attention. Decoder Transformer blocks. GPT language models. Lecture notes. The original papers: Improving Language Understanding by Generative Pre-training, the GPT-1 paper; Language Models are Unsupervised Multitask Learners, the GPT-2 paper; and Language Models are Few-Shot Learners, the GPT-3 paper. And if you're interested in getting a high-level overview of the state of LLMs, see this survey on LLMs (from February 2024).

- Lecture 24, November 21/2024. Generative modeling: learning the distribution of training samples, and generating new samples from that distribution. Latent space approaches. Generative Adversarial Networks (GANS): generators and discriminators, min-max optimization. Lecture notes. The original papers: Generative Adversarial Networks, the original GANs paper; Unsupervised Representation Learning with Deep Convolutional Generative Adversarial Networks, the DCGANs (Deep convolutional GANs) paper that introduced an influential transposed convolutional architecture for the generator in image tasks; Unpaired Image-to-Image Translation using Cycle-Consistent Adversarial Networks, the CyleGAN paper that introduced the concept of cycle-losses for mapping images between different domains while retaining their content.

- Lecture 25, December 2/2024. KL-divergence and reverse KL-divergence, and their use in variational approximation. Latent generative models and variational autoencoders (VAEs): encoders, decoders, and their architectures. The Evidence Lower Bound (ELBO), and ts interpretation as a sum of a negative reconstruction loss and a semantic regularizer. The reparameterization trick. Lecture notes. Supplemental reading: Auto-Encoding Variational Bayes, the original VAE paper.

- Lecture 26, December 5/2024. In class project presentations.

- Lecture 27, December 9/2024. In class project presentations.

Homeworks and Weekly Participation

Homework/Participation submission link: pdfs and Jupyter notebooks only, 5MB limit

Late assignments will not be accepted, unless you contact the instructor at least two days before the due date to receive a deferral. Deferrals will be granted at the instructor’s discretion, of course.

- Weekly Participation 1. Due 9/3/2024.

- Weekly Participation 2. Due 9/12/2024.

- Weekly Participation 3. Due 9/19/2024.

- Weekly Participation 4. Due 9/26/2024.

- Weekly Participation 5. Due 10/3/2024.

- Weekly Participation 6. Due 10/10/2024.

- Weekly Participation 7. Due 10/17/2024. (Be aware there are no office hours on October 14).

- Weekly Participation 8. Due 10/24/2024.

- Weekly Participation 9. Due 11/1/2024.

- Weekly Participation 10. Due 11/7/2024.

- Weekly Participation 11. Due 11/14/2024.

- Weekly Participation 12. Due 11/21/2024.

- Homework 1. Assigned Thursday September 12, 2024. Due by 11:59pm ET Thursday September 26, 2024.

- Homework 2. Assigned Thursday September 26, 2024. Due by 11:59pm ET Thursday October 10, 2024.

- Homework 3. Assigned Thursday October 10, 2024. Due by 11:59pm ET Thursday October 24, 2024.

- Homework 4. Assigned Thursday October 24, 2024. Due by 11:59pm ET Thursday November 7, 2024.

- Homework 5. Assigned Thursday November 7, 2024. Due by 11:59pm ET Thursday November 21, 2024.

Project

In teams of up to five, you will present either an original research project or an exposition on a topic relevant to the course. See the project page for more details and deadlines. Your group assignments will be posted to Piazza.

Supplementary Materials

For your background reading, if you are unfamiliar with the linear algebra and probability being used:- Introduction to Applied Linear Algebra: Vectors, Matrices, and Least Squares. Boyd and Vandenberghe.

- Jeff Erickson's notes on discrete probability. Erickson.

- Introduction to Probability, Statistics, and Random Processes. Pishro-Nik.

- Chapter 3 of "Deep Learning". Goodfellow, Bengio, and Courville.

- Chapter 1 of "Bayesian Reasoning and Machine Learning". Barber.

- Convexity and Optimization. Lecture notes by R. Tibshirani.

- Optimization for Machine Learning. Lecture notes by E. Hazan.

- Optimization Methods for Large-scale Machine Learning. SIAM Review article. Bottou, Curtis, and Nocedal.

- Convex Optimization: Algorithms and Complexity. Bubeck

- Convex Optimization. Boyd and Vandenberghe.