CSCI 6962/4180 Trustworthy Machine Learning, Fall 2025

Overview

In today's world it is no longer sufficient to consider the traditional metrics of accuracy when judging the trustworthiness of systems built using machine learning. It is important to also consider the alignment, fairness, robustness, privacy, and attack surfaces of machine learning systems.

This seminar course introduces these topics to students who already have a basic understanding of machine learning. In it, you will explore fundamental questions and learn tools and methods to measure and ensure these aspects of trustworthiness in machine learning. We will delve into both seminal and recent papers to examine the growing body of research on trustworthy machine learning. The course consists of several lectures delivered by the instructor and at least one seminar by each student.

We will cover five broad areas:

- Alignment of LLMs: An AI system is well-aligned with human values when its behavior follows human norms. In this class, we consider the alignment of large language models (LLMs). How do we measure the extent to which LLMs respect human norms such as avoiding biased, toxic, unethical or illegal, or privacy-sensitive content? How can we train them to be more aligned, and what are the limitations of alignment?

- Attack Models: What are useful categorizations of the types of attacks an adversary may make upon an ML framework? Namely, what aspects of the model are targeted, for what goals, and what capabilities must the adversaries possess? And how can we defend against these attacks?

- Privacy and Confidentiality: Can we trust machine learning frameworks to have access to personal data? Can we trust the models not to reveal personal information or sensitive decision rules? How can we quantify disclosure risk?

- Robustness: In settings where training data is noisy or adversarially crafted, can we trust the algorithms to learn robust decision rules? Can we trust them to make correct predictions on adversarial or noisy testing data?

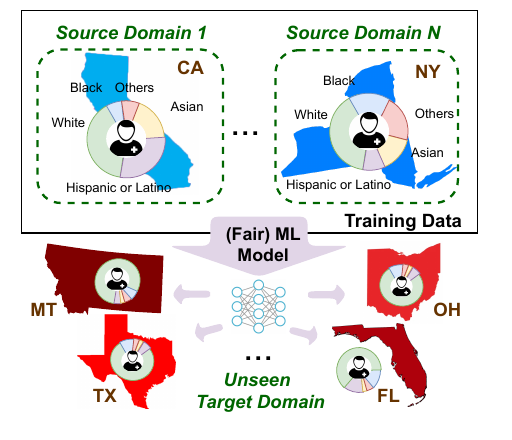

- Algorithmic Fairness: Bias affecting some groups in the population underlying a data set can arise both from a lack of representation in the data and from poor choices of learning algorithms. Can we build trustworthy algorithms that remove disparities and provide fair predictions for all groups? How do we quantify fairness?

Course Logistics

The syllabus is available as an archival PDF, which is more authoritative than this website.

Instructor: Alex Gittens (gittea at rpi dot edu)

Lectures: MTh 2pm-3:50pm ET in Ricketts 212

Questions, Discussions, and Course Material: Piazza

Office Hours: by appointment in Lally 316 (or you can stop by and see if I am free)

Grading Criteria:

- Seminar, 60%

- Project, 20%

- Attendance and Participation, 20% (NB: attendance is mandatory)

Letter grades will be computed from the semester average. For undergraduates, the lower-bound cutoffs for A, B, C, and D grades are 90%, 80%, 70%, and 60%, respectively. For graduate students, the lower-bound cutoffs for A, B, and C grades are 90%, 80%, and 70%, respectively. These bounds may be moved lower at the instructor's discretion.
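
As a rough illustration of the arithmetic, the sketch below computes a semester average from the weights listed above and maps it to a letter using the default cutoffs. It assumes each component is scored on a 0-100 scale and that an average below the lowest cutoff maps to "F"; neither assumption is stated on this page, and the function and variable names are hypothetical. The syllabus remains the authority, and the cutoffs may be moved lower.

```python
# Weights from the grading criteria (fractions of the semester average).
WEIGHTS = {"seminar": 0.60, "project": 0.20, "participation": 0.20}

# Default lower-bound cutoffs, highest first. The instructor may lower these.
UNDERGRAD_CUTOFFS = [("A", 90.0), ("B", 80.0), ("C", 70.0), ("D", 60.0)]
GRAD_CUTOFFS = [("A", 90.0), ("B", 80.0), ("C", 70.0)]


def semester_average(scores):
    """Weighted average of component scores (assumed to be on a 0-100 scale)."""
    return sum(WEIGHTS[name] * scores[name] for name in WEIGHTS)


def letter_grade(average, cutoffs):
    """Return the first letter whose lower bound the average meets."""
    for letter, lower_bound in cutoffs:
        if average >= lower_bound:
            return letter
    # Assumption: anything below the lowest listed cutoff is an F.
    return "F"


if __name__ == "__main__":
    example = {"seminar": 85.0, "project": 90.0, "participation": 100.0}
    avg = semester_average(example)
    print(f"average = {avg:.1f}, undergraduate letter = {letter_grade(avg, UNDERGRAD_CUTOFFS)}")
```

Note that the graduate scale lists no D cutoff, so under the assumption above the same average can map to different letters for undergraduate and graduate students.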

Course Materials

The papers, presentations, lecture notes, and discussions are available on Piazza.

Project

Each student will complete a final project. Graduate students will complete a research project related to the subject matter of the course, and undergraduate students have the choice to complete either a research project or a pedagogical project. See the project page for more details.

Supplementary Materials

If you need a refresher on the ML architectures and concepts you may encounter in the assigned reading, see the lecture notes and supplementary materials from the last half of my Machine Learning and Optimization course.