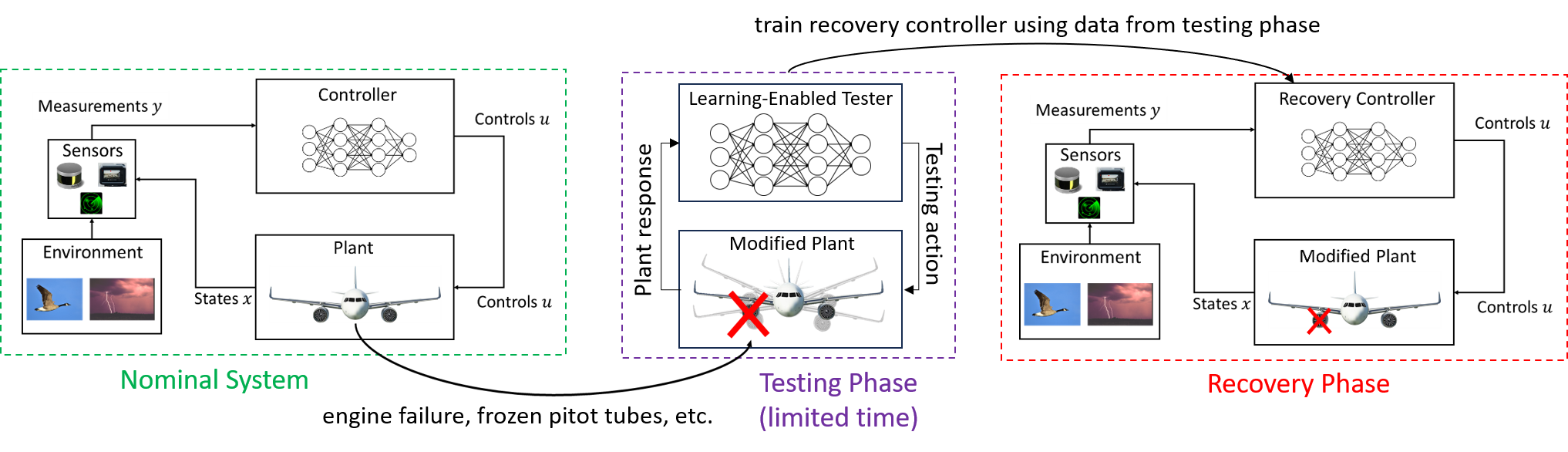

Assured Recovery: Training, Testing and Verification of Autonomous Systems with Recovery Capabilities

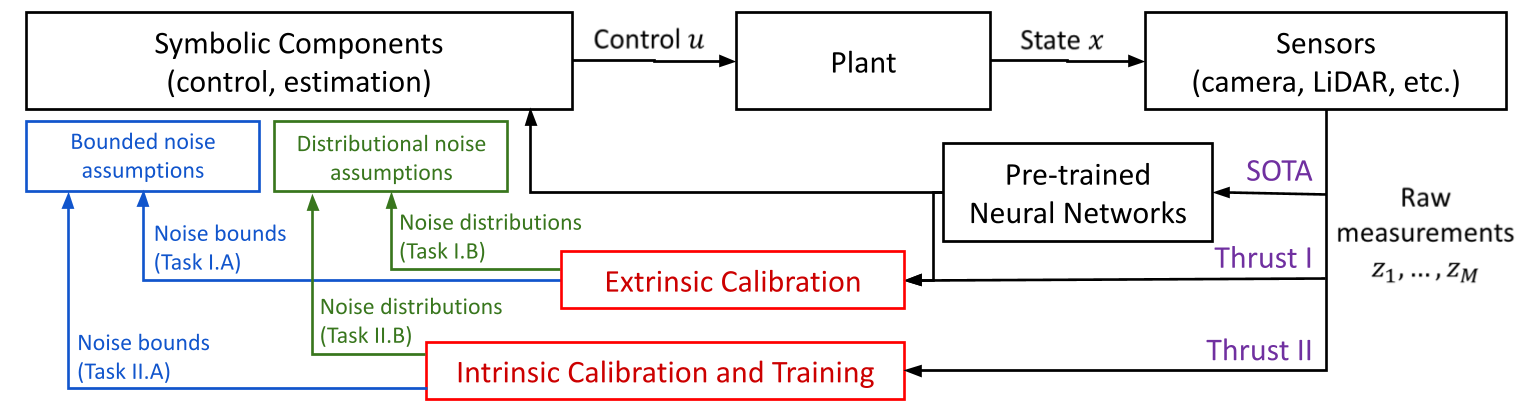

Neuro-Symbolic Bridge: From Perception to Estimation and Control

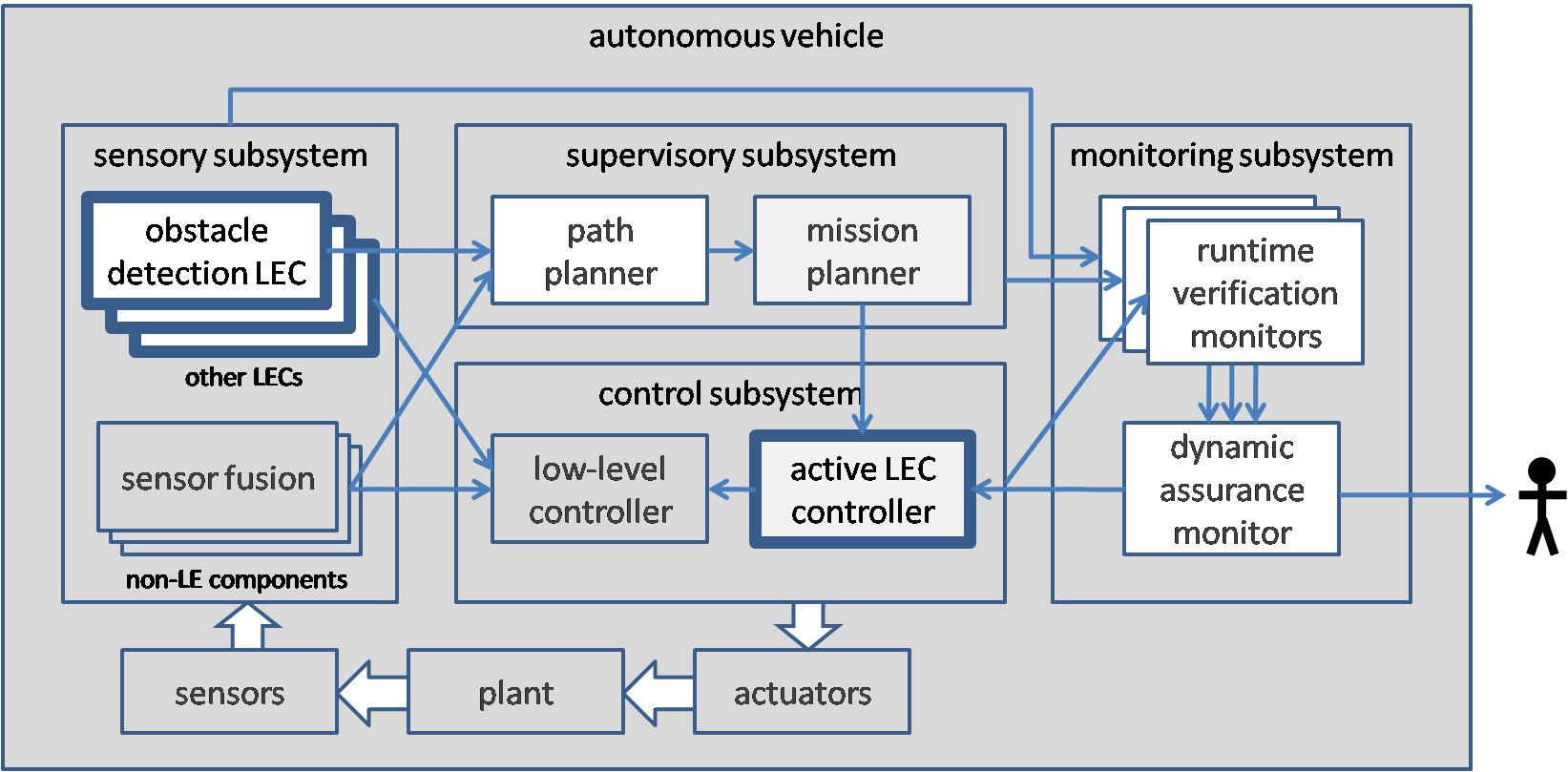

Assured Autonomy

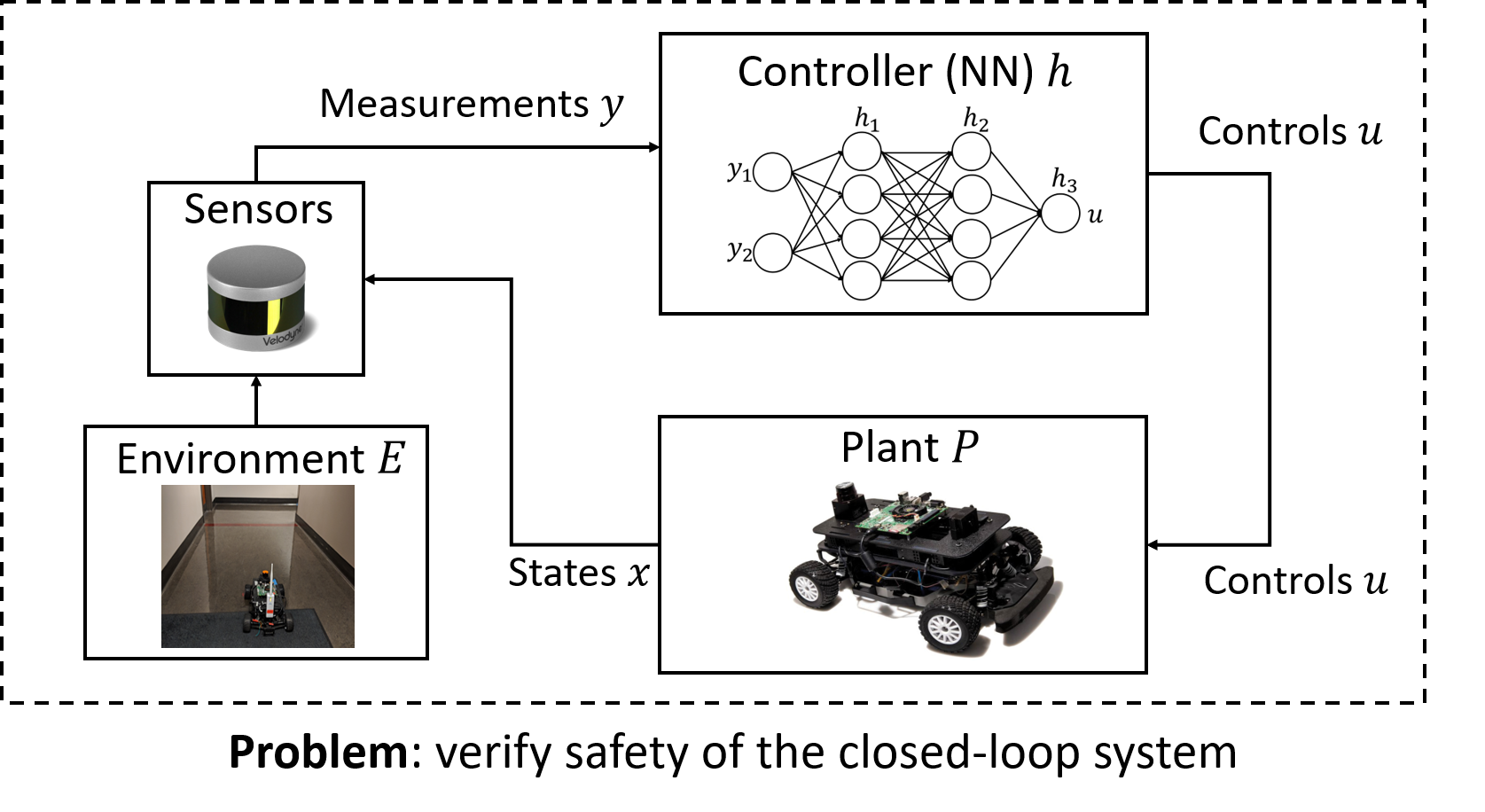

Verifying Safety of Autonomous Systems with Neural Network Components

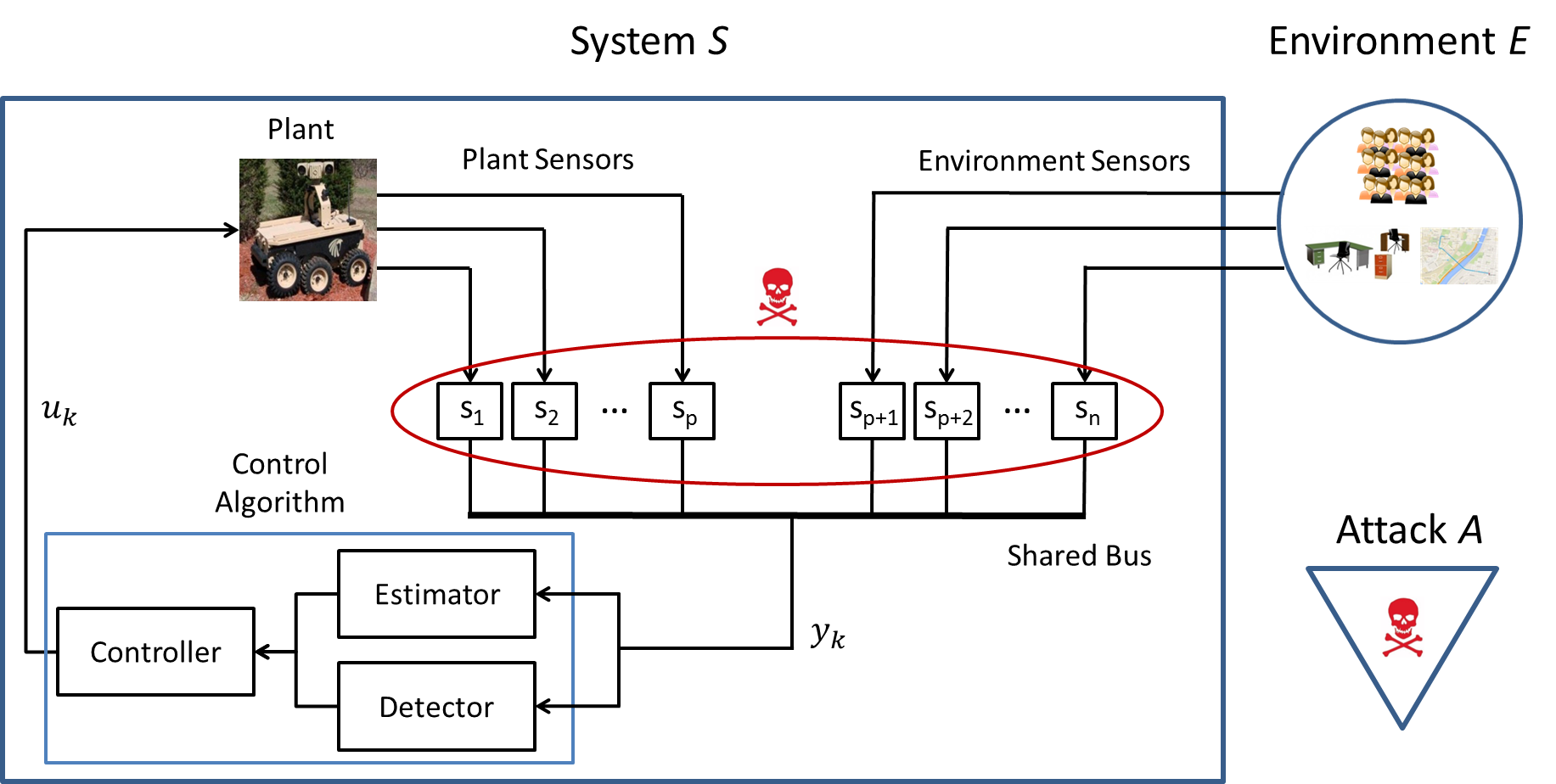

Security of Cyber-Physical Systems

The increasing autonomy and sophistication of modern cyber-physical systems (CPS) have led to multiple accidents in recent years, caused not only by component failures but also by malicious attacks exploiting communication vulnerabilities, software bugs, and similar weaknesses. These accidents have exposed the need for a unifying theory for analyzing the safety of such systems even in the presence of faults and attacks. The main challenge in developing such techniques is that, due to the complexity of modern CPS, components might experience unexpected faults or attacks and behave in arbitrary ways, so no assumptions can be made about how or when components might fail.

Safety Detection Using Sensor Fusion

Sensor Attack Detection

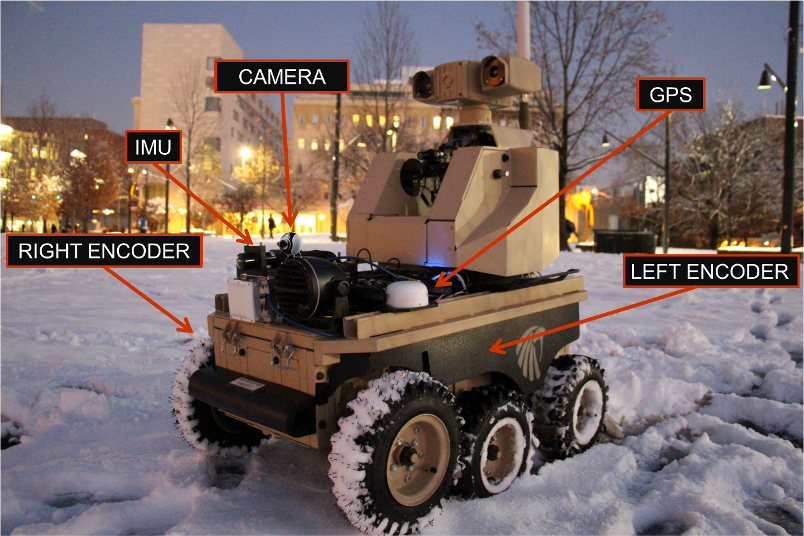

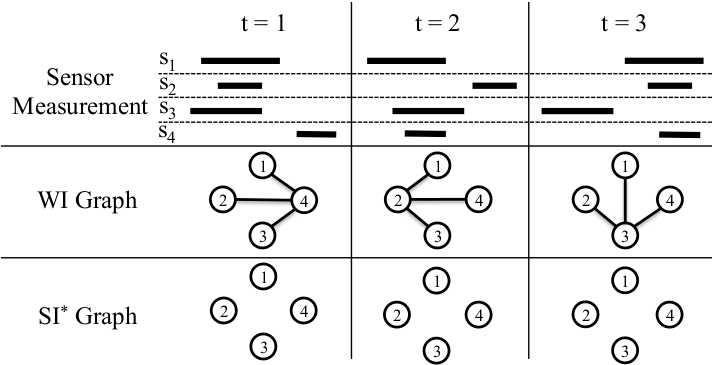

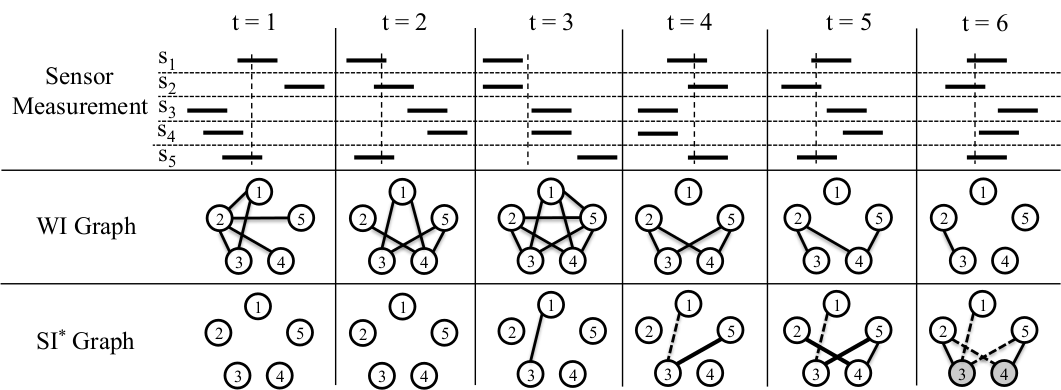

To further improve the output of sensor fusion (and of other components that use sensor data), we also developed techniques for sensor attack detection and identification. As in the sensor fusion framework, this work relies on sensor redundancy to avoid unrealistic assumptions about how and when faults or attacks might occur; existing anomaly detection works assume either that the system has a known initial condition or that the attack or fault has a known effect. Since sensors often experience transient faults that are a normal part of system operation (e.g., GPS losing its connection in a tunnel), we introduced the notion of a transient fault model for each sensor and developed an approach for identifying such models from data. Given these transient fault models, we presented an attack detection method, based on pairwise inconsistencies between sensor measurements (as shown on the right), that flags only attacks and does not raise alarms due to transient faults. We evaluated our approach using real data from the LandShark, retrospectively augmented with various attacks; our approach was eventually able to detect all sensor attacks.
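The detection idea can be illustrated with a small Python sketch. This is a hedged illustration under stated assumptions, not the method from this work: each sensor is assumed to report an interval (its measurement plus or minus a known error bound); a sensor counts as inconsistent at a time step if its interval is disjoint from a strict majority of the other sensors' intervals (assuming fewer than half of the sensors are attacked); and a transient fault model is parameterized as a hypothetical pair `(window, max_faults)`, the number of inconsistent steps tolerated within a sliding window. The names `TransientFaultModel`, `fit_transient_fault_model`, and `PairwiseAttackDetector` are illustrative only.

```python
from collections import deque
from dataclasses import dataclass


@dataclass(frozen=True)
class TransientFaultModel:
    # Hypothetical parameterization of a per-sensor transient fault model.
    window: int      # length of the sliding window, in time steps
    max_faults: int  # inconsistent steps tolerated within the window


def intervals_disjoint(a, b):
    """Two measurement intervals (lo, hi) are inconsistent if they do not overlap."""
    return a[1] < b[0] or b[1] < a[0]


def inconsistent_sensors(intervals):
    """Indices of sensors whose interval is disjoint from a strict majority of the
    others (assumption: fewer than half of the sensors are attacked)."""
    n = len(intervals)
    flagged = set()
    for i in range(n):
        conflicts = sum(
            intervals_disjoint(intervals[i], intervals[j]) for j in range(n) if j != i
        )
        if 2 * conflicts > n - 1:
            flagged.add(i)
    return flagged


def fit_transient_fault_model(clean_flags, window):
    """Hypothetical data-driven fit: the largest number of inconsistent steps seen in
    any sliding window of attack-free data becomes the tolerated fault budget."""
    worst, recent = 0, deque(maxlen=window)
    for flag in clean_flags:
        recent.append(flag)
        worst = max(worst, sum(recent))
    return TransientFaultModel(window=window, max_faults=worst)


class PairwiseAttackDetector:
    """Raises an alarm only when a sensor's inconsistencies exceed its fault budget."""

    def __init__(self, models):
        self.models = models
        # One sliding window of inconsistency flags per sensor.
        self.history = [deque(maxlen=m.window) for m in models]

    def step(self, intervals):
        """Process one time step; return the indices of sensors flagged as attacked."""
        flagged = inconsistent_sensors(intervals)
        attacked = set()
        for i, model in enumerate(self.models):
            self.history[i].append(i in flagged)
            if sum(self.history[i]) > model.max_faults:
                attacked.add(i)
        return attacked
```

For example, with three sensors that each use `TransientFaultModel(window=5, max_faults=1)`, a single step in which one sensor disagrees with the other two is absorbed as a transient fault, while a second disagreeing step within the window raises an alarm for that sensor. Separating the per-step inconsistency check from the windowed fault budget keeps the (assumed) fault model a per-sensor parameter that can be fitted from attack-free data.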

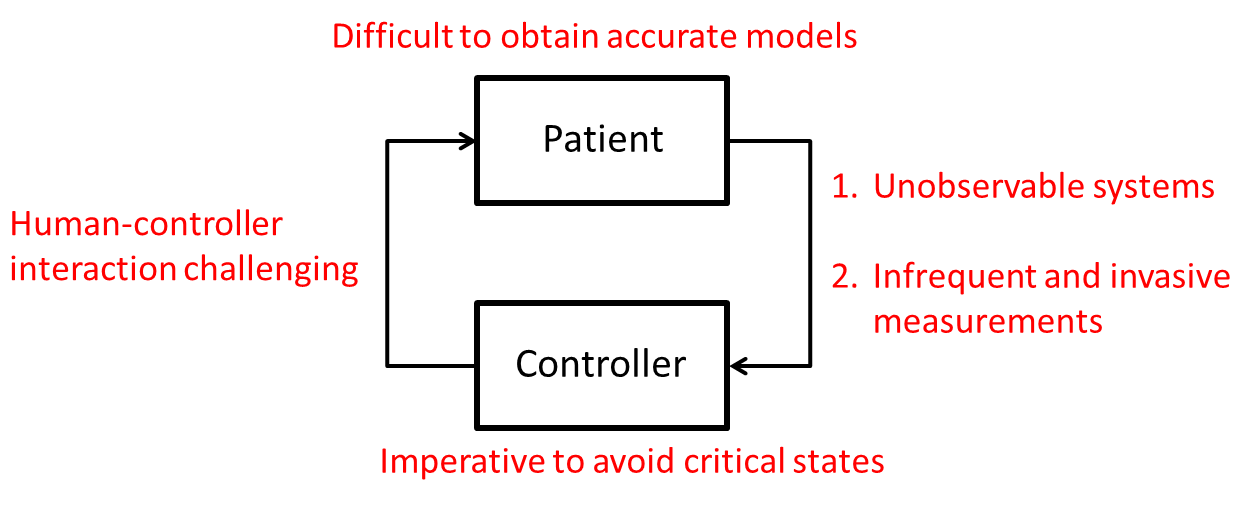

High-Assurance Medical Cyber-Physical Systems

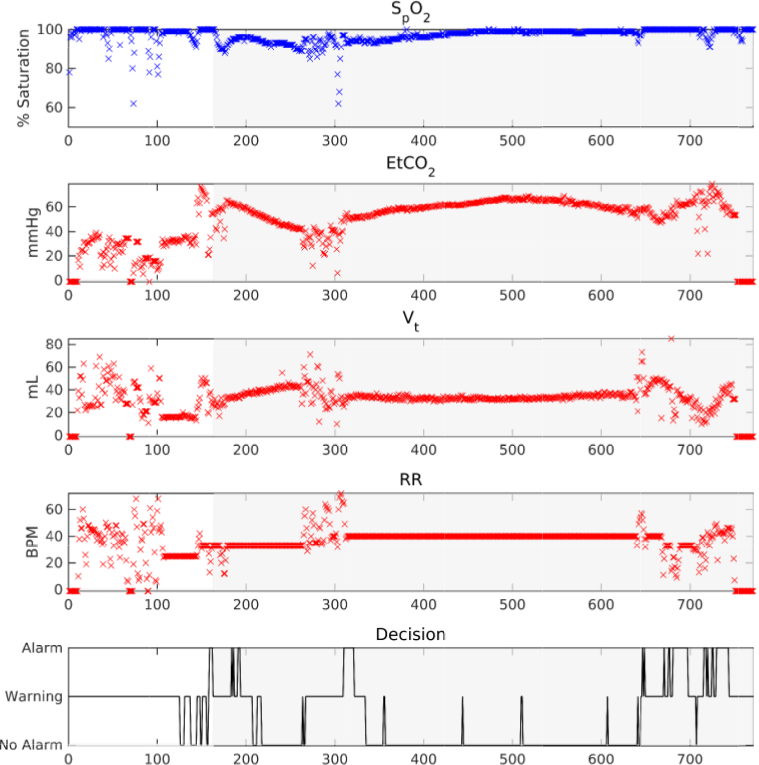

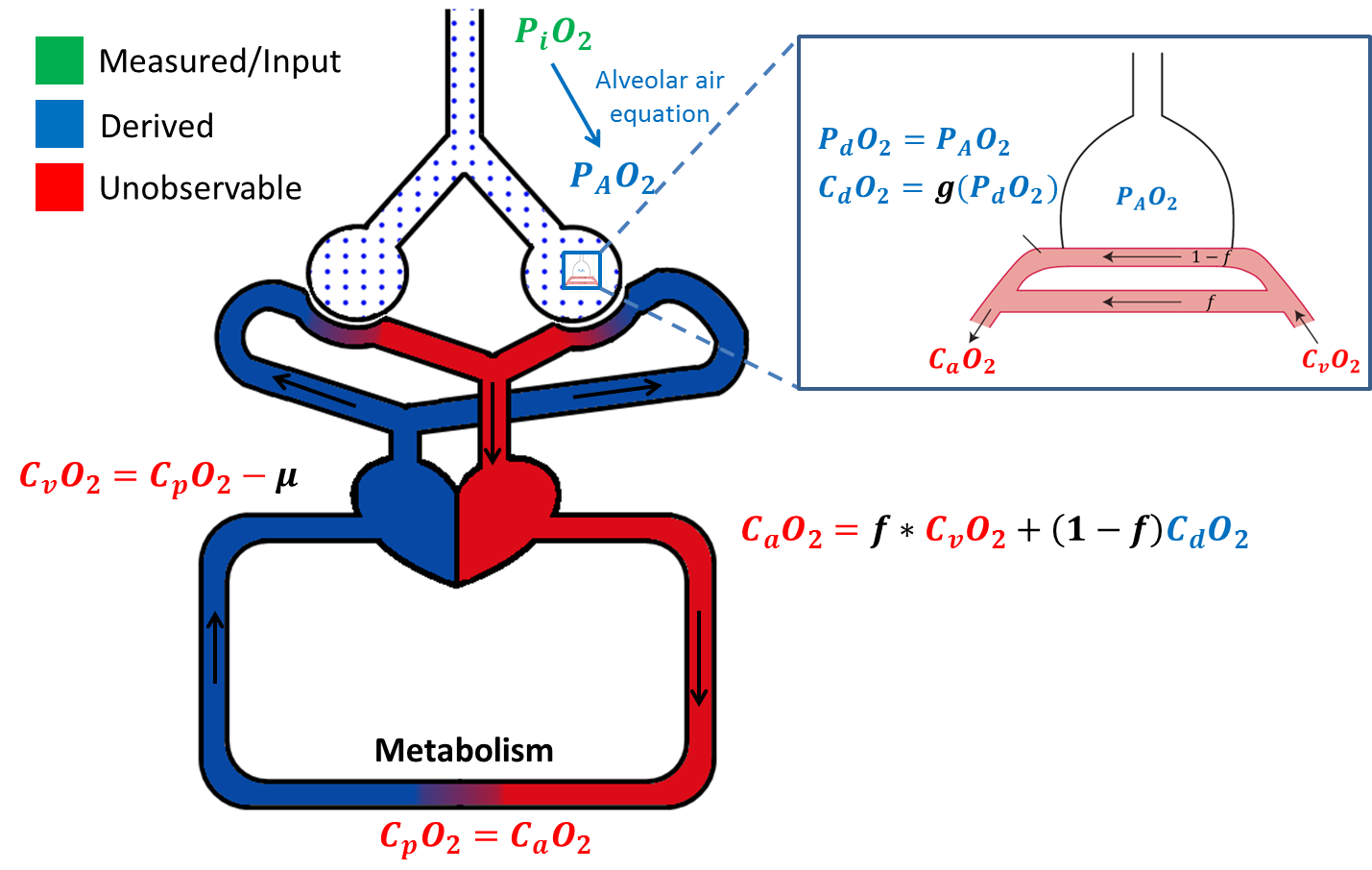

Estimation of Blood Oxygen Content Using Context-Aware Filtering

Prediction of Critical Pulmonary Shunts in Infants